Automated Entity Resolution Pipeline for Record Matching

The client had thousands of records pulled from different sources, all slightly off. One record said “J. Smith,” another “John Smith,” a third had no first name at all. Multiply that by tens of thousands of people, and you’ve got chaos. No one knew which record was right, or if they were looking at the same person twice. And that meant bad reports, duplicate emails, missed insights, and wasted money.

They had tried to fix it with a homegrown entity resolution algorithm. It used simple rules: match names exactly, compare phone numbers, things like that. It kind of worked, but only when the data was clean. It couldn’t deal with typos, missing info, or different formats. Worse, it broke down on large datasets and couldn’t be reused. Every tweak made it harder to maintain. So, they called us.

Key Challenges of the Entity Matching Project

Inconsistent Formats

Some records had “Smith, John.” Others said “John S.” Or just “J. Smith.” And sometimes—no name at all. Add in five ways to write a birthdate, typos, missing fields, and inconsistent address formatting, and you’ve got a mess. Normalizing this kind of data was not an easy task.

Record Matching Complexity

We couldn’t just say “if name = name, it's a match.” Real-world data doesn’t work like that. People write things differently, move, change emails. If we set the match threshold too low, we’d end up combining records that weren’t the same. Too high, and we’d miss real matches. We had to tune it just right—and it took work.
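To make the threshold trade-off concrete, here is a minimal sketch that measures precision and recall at a few cut-offs. The labelled pairs are made-up illustrative values, not project data, and `precision_recall` is a hypothetical helper name.

```python
from typing import List, Tuple

# Hypothetical hand-labelled pairs: (match score, truly the same person?)
LABELLED_PAIRS: List[Tuple[float, bool]] = [
    (0.99, True), (0.95, True), (0.90, True), (0.88, False),
    (0.80, True), (0.70, False), (0.60, True), (0.40, False),
]


def precision_recall(pairs: List[Tuple[float, bool]], threshold: float) -> Tuple[float, float]:
    """Precision and recall if every pair scoring >= threshold is merged."""
    true_pos = sum(1 for score, same in pairs if score >= threshold and same)
    false_pos = sum(1 for score, same in pairs if score >= threshold and not same)
    false_neg = sum(1 for score, same in pairs if score < threshold and same)
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 1.0
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 1.0
    return precision, recall


# A low threshold merges wrong records; a high one misses real matches.
for t in (0.50, 0.85, 0.97):
    p, r = precision_recall(LABELLED_PAIRS, t)
    print(f"threshold={t:.2f} precision={p:.2f} recall={r:.2f}")
```

On this toy data, lowering the threshold raises recall but lets false merges in, while raising it does the opposite. That is the balance we had to tune.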

Tech Orchestration

Parts of the pipeline ran in Scala, others in Python. The whole thing had to live on AWS. And Airflow had to keep it all working—on schedule, in the right order, with logs we could actually trust. One broken link, and the whole process could stall or deliver bad data. It had to be tight.

Data Ingestion Bottlenecks

Once the data was cleaned and matched, we had to load it. And we’re talking millions of records, monthly. The challenge was in feeding all that into MariaDB without causing timeouts, failures, or locking up the database. Standard loading just couldn’t keep up. We had to build a smarter, chunked ingestion process that could scale without breaking things.

Our Approach: Entity Resolution Techniques That Worked for Us

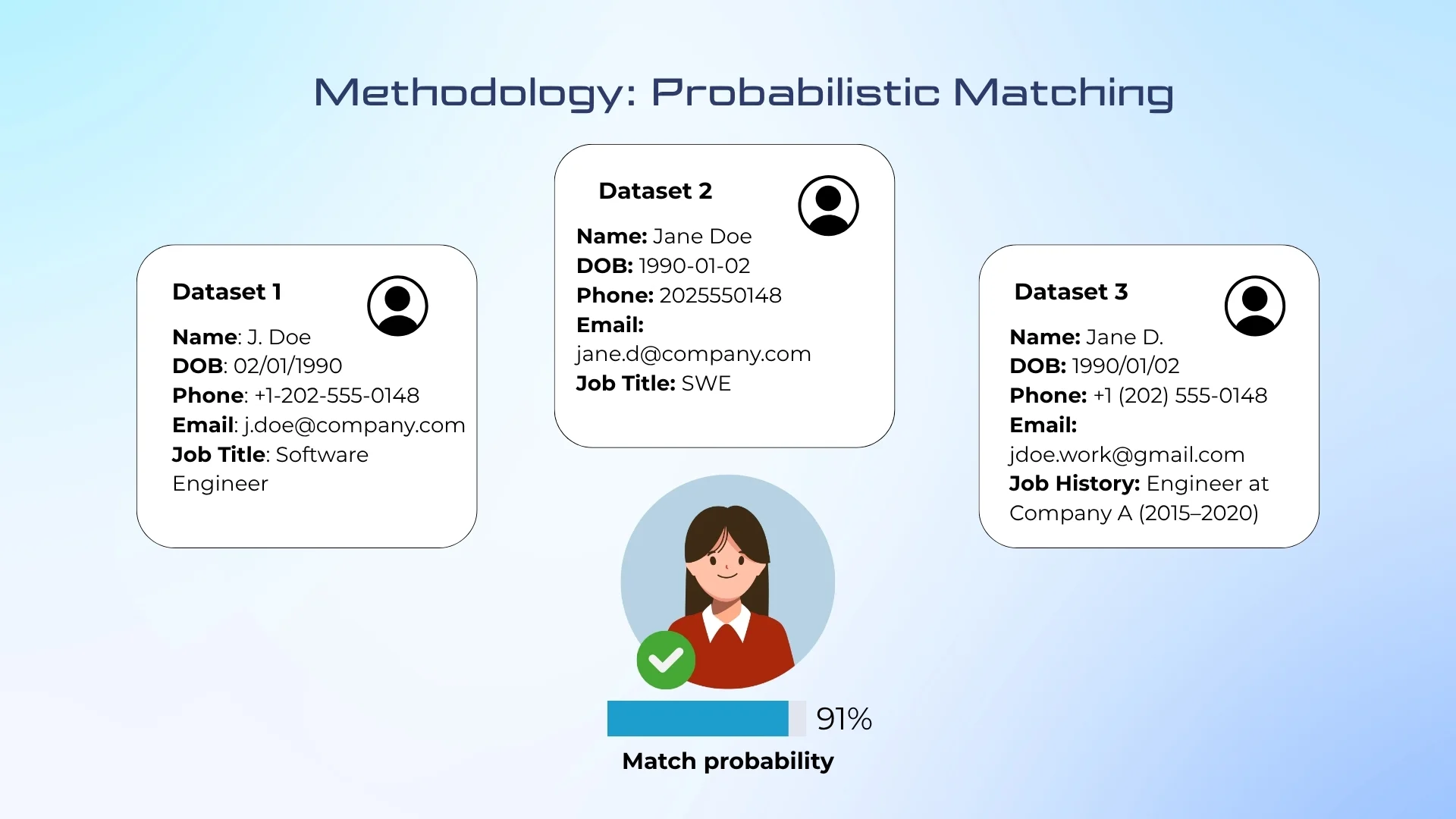

We used probabilistic record linkage with Splink as the core matching method. Unlike rule-based systems, it calculates the likelihood that two records refer to the same entity—even when fields don’t match exactly. We defined comparison rules for names, dates, phones, emails, jobs, education, and more. This let us catch real matches others miss—especially when data was messy, incomplete, or inconsistent. Matching was score-based, not binary. We reviewed borderline cases manually during the tuning phase, feeding them back into the model to improve future runs.
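For readers who want to see how a score-based match comes together, below is a rough pure-Python sketch of Fellegi-Sunter style scoring, the model family that Splink's documentation describes as its basis. It does not call Splink. The field names, the m/u probabilities, and the prior are illustrative assumptions, not the values used in the project.

```python
import math
from typing import Dict, Optional

# Assumed per-field parameters (illustrative only):
#   m = P(field agrees | records are the same entity)
#   u = P(field agrees | records are different entities)
FIELD_PARAMS = {
    "surname": {"m": 0.95, "u": 0.01},
    "birth_date": {"m": 0.90, "u": 0.002},
    "email": {"m": 0.80, "u": 0.0005},
}
PRIOR = 1e-4  # assumed chance that a random record pair is a true match


def match_probability(agreements: Dict[str, Optional[bool]], prior: float = PRIOR) -> float:
    """Combine per-field evidence into a match probability.

    agreements maps field -> True (agrees), False (disagrees), None (missing).
    """
    log_odds = math.log2(prior / (1 - prior))
    for field, agrees in agreements.items():
        if agrees is None:
            continue  # in this sketch a missing field adds no evidence
        m, u = FIELD_PARAMS[field]["m"], FIELD_PARAMS[field]["u"]
        bayes_factor = m / u if agrees else (1 - m) / (1 - u)
        log_odds += math.log2(bayes_factor)  # match weight for this field
    odds = 2 ** log_odds
    return odds / (1 + odds)


strong = match_probability({"surname": True, "birth_date": True, "email": True})
weak = match_probability({"surname": True, "birth_date": None, "email": None})
print(f"all fields agree: {strong:.4f}, surname only: {weak:.4f}")
```

The point of the sketch is that no single field decides the outcome. A shared surname alone barely moves the score, while several agreeing fields push it close to 1.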

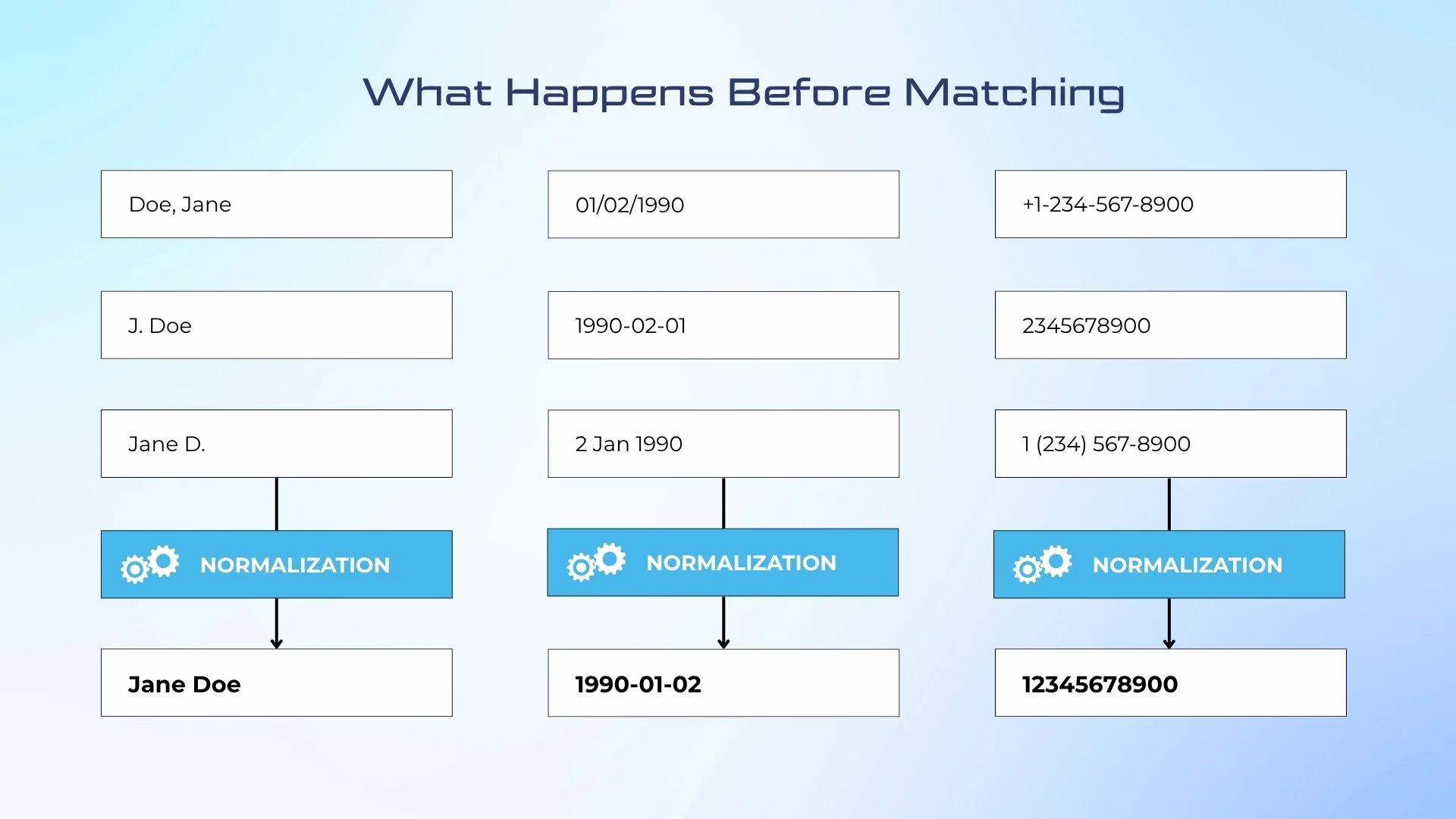

We cleaned and standardized every field before matching. Names came in every format imaginable—"Doe, Jane," "J. Doe," "Jane D."—so we stripped titles, aligned casing, and parsed name parts. Dates were unified to ISO. Phone numbers were reformatted. We normalized education and job history using reference lists. For addresses, we ran them through a third-party cleaning tool. All this ensured we compared records on real content.
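A simplified sketch of what this kind of field cleanup can look like is shown below. The title list, the accepted date formats (day-first is assumed for slashed dates), and the digits-only phone format are assumptions made for illustration, and the function names are hypothetical.

```python
import re
from datetime import datetime
from typing import Dict, Optional

TITLES = {"mr", "mrs", "ms", "dr", "prof"}  # assumed title list
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")  # assumed input formats


def normalize_name(raw: str) -> Dict[str, Optional[str]]:
    """Strip titles, align casing, and split a name into first/last parts."""
    text = raw.strip()
    if "," in text:  # "Doe, Jane" -> "Jane Doe"
        last, first = [part.strip() for part in text.split(",", 1)]
        text = f"{first} {last}"
    tokens = [t.strip(".,").lower() for t in text.split()]
    tokens = [t for t in tokens if t and t not in TITLES]
    if not tokens:
        return {"first": None, "last": None}
    if len(tokens) == 1:
        return {"first": None, "last": tokens[0].title()}
    return {"first": tokens[0].title(), "last": tokens[-1].title()}


def normalize_date(raw: str) -> Optional[str]:
    """Return the date in ISO format (YYYY-MM-DD), or None if unparseable."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def normalize_phone(raw: str) -> Optional[str]:
    """Keep digits only; return None when no digits are present."""
    digits = re.sub(r"\D", "", raw)
    return digits or None


print(normalize_name("Doe, Jane"), normalize_date("03/04/1985"), normalize_phone("(555) 010-1234"))
```

After this step, "Doe, Jane" and "Dr. Jane Doe" produce the same name parts, so the matcher compares content rather than formatting.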

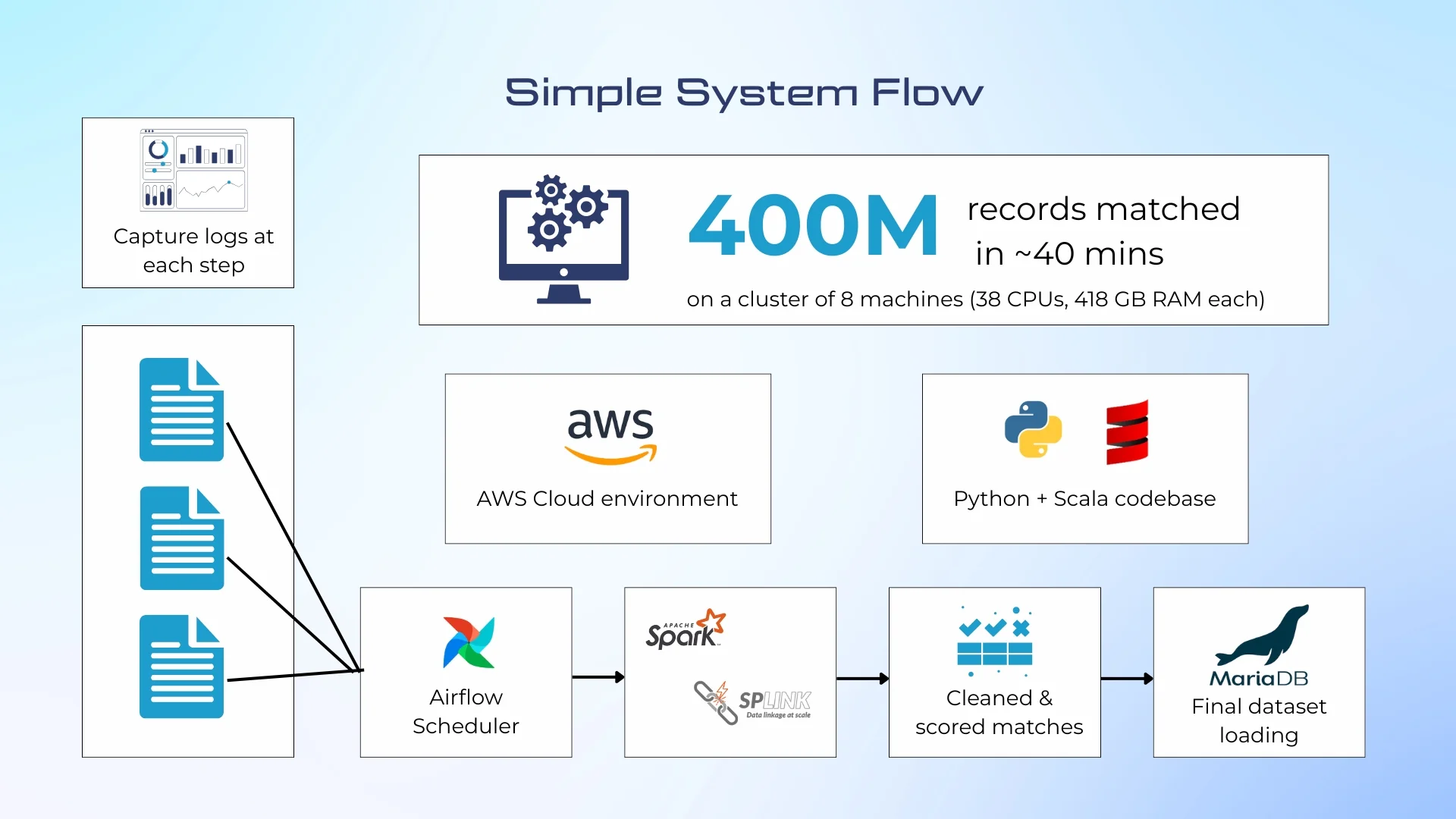

We built a fully automated pipeline using a modern, distributed stack. Apache Spark handled data-intensive operations, powering probabilistic matching with Splink. Airflow orchestrated the workflow—from ingesting raw data to launching Spark jobs, validating results, and loading final datasets into MariaDB. Logs were captured at each step to support traceability and debugging.
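The ordering and logging behaviour described above can be illustrated without Airflow itself. The sketch below uses only the Python standard library to run placeholder steps in dependency order, log each one, and halt downstream steps on failure. In the real pipeline each step would be an Airflow task; the step names and the `run_pipeline` helper here are hypothetical.

```python
import logging
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("pipeline")


def _noop() -> None:
    """Placeholder for real work (Spark job, validation query, load)."""


# Hypothetical step names mirroring the stages described in the text.
STEPS: Dict[str, Callable[[], None]] = {
    "ingest_raw": _noop,
    "run_spark_matching": _noop,
    "validate_results": _noop,
    "load_to_mariadb": _noop,
}

# Each step maps to the set of steps that must finish before it starts.
DEPENDENCIES: Dict[str, Set[str]] = {
    "run_spark_matching": {"ingest_raw"},
    "validate_results": {"run_spark_matching"},
    "load_to_mariadb": {"validate_results"},
}


def run_pipeline(steps: Dict[str, Callable[[], None]],
                 dependencies: Dict[str, Set[str]]) -> List[str]:
    """Run steps in dependency order; stop at the first failure.

    Returns the names of the steps that completed.
    """
    completed: List[str] = []
    for name in TopologicalSorter(dependencies).static_order():
        log.info("starting %s", name)
        try:
            steps[name]()
        except Exception:
            log.exception("step %s failed; halting downstream steps", name)
            break
        log.info("finished %s", name)
        completed.append(name)
    return completed


print(run_pipeline(STEPS, DEPENDENCIES))
```

The design choice worth noting is that a failed validation stops the load step, so bad data never reaches the database.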

The implementation combined Scala and Python, each chosen for the part of the pipeline it fit best. Everything ran on AWS. The result: a repeatable, fault-tolerant system capable of processing millions of records on a consistent schedule—no manual intervention required. For example, on a cluster of 8 machines (38 CPUs, 418 GB RAM each), the system matched 400 million records in about 40 minutes.
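To put those figures in perspective, here is the back-of-the-envelope arithmetic. It uses only the numbers quoted above and treats "about 40 minutes" as exactly 40, so the rates are approximate.

```python
RECORDS = 400_000_000
MINUTES = 40
MACHINES = 8
CPUS_PER_MACHINE = 38
RAM_GB_PER_MACHINE = 418

total_cpus = MACHINES * CPUS_PER_MACHINE        # 304 CPUs across the cluster
total_ram_gb = MACHINES * RAM_GB_PER_MACHINE    # 3,344 GB RAM across the cluster
records_per_second = RECORDS / (MINUTES * 60)   # roughly 166,667 records per second
records_per_cpu_second = records_per_second / total_cpus  # roughly 548 per CPU per second

print(f"{total_cpus} CPUs, {total_ram_gb} GB RAM")
print(f"{records_per_second:,.0f} records/s overall, {records_per_cpu_second:,.0f} per CPU")
```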

Technologies We Used to Build the Entity Resolution Pipeline

Scala

Python

Airflow

AWS

Splink

Spark

MariaDB

The Entire Process Followed a Structured Flow

1. Normalization

We cleaned and standardized fields across the dataset—names, dates, phone numbers, job titles, education history, and addresses. This step aligned formatting across inconsistent sources and ensured comparability during matching.

2. Matching

Using Splink on Spark, we applied probabilistic record linkage across multiple fields. Each record pair received a match score, and pairs scoring 85% or higher were treated as duplicates.

3. Dataset Formation

Matched records were consolidated into unified entries. Metadata was added to preserve traceability—so every final record could be tracked back to its original sources.
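One way to sketch this consolidation step is shown below: pairs at or above the threshold are grouped with union-find, then each group is merged into a single entry that keeps a list of its source record ids. The transitive grouping, the "first non-empty value wins" rule, and the `source_ids` field name are assumptions for illustration, not a description of the production logic.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def cluster_pairs(record_ids: List[str],
                  scored_pairs: Iterable[Tuple[str, str, float]],
                  threshold: float = 0.85) -> List[List[str]]:
    """Group record ids via union-find over pairs scoring >= threshold."""
    parent = {rid: rid for rid in record_ids}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    for left, right, score in scored_pairs:
        if score >= threshold:
            parent[find(left)] = find(right)

    clusters: Dict[str, List[str]] = defaultdict(list)
    for rid in record_ids:
        clusters[find(rid)].append(rid)
    return list(clusters.values())


def consolidate(records: List[dict], clusters: List[List[str]]) -> List[dict]:
    """Merge each cluster into one entry; first non-empty value per field wins."""
    by_id = {r["id"]: r for r in records}
    unified = []
    for members in clusters:
        merged: dict = {}
        for rid in members:
            for field, value in by_id[rid].items():
                if field != "id" and value and not merged.get(field):
                    merged[field] = value
        merged["source_ids"] = sorted(members)  # traceability back to sources
        unified.append(merged)
    return unified


records = [
    {"id": "a", "name": "J. Smith", "email": None},
    {"id": "b", "name": "John Smith", "email": "john@example.com"},
    {"id": "c", "name": "Ann Lee", "email": "ann@example.com"},
]
pairs = [("a", "b", 0.93), ("b", "c", 0.10)]
clusters = cluster_pairs([r["id"] for r in records], pairs)
unified = consolidate(records, clusters)
print(unified)
```

Keeping `source_ids` on every unified entry is what makes it possible to trace a final record back to the originals it was built from.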

4. Ingestion

Final datasets were loaded into MariaDB via a custom-built, parallelized ingestion tool that supported large volumes and avoided performance bottlenecks.
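The chunking idea behind the ingestion tool can be sketched roughly as follows. This is not the custom tool itself: it loads chunks sequentially, and it uses the standard-library `sqlite3` module as a stand-in so the example runs anywhere. With MariaDB you would pass a connection from a MariaDB driver instead and adjust the SQL placeholder style to that driver. The table name, columns, and chunk size are hypothetical.

```python
import sqlite3
from itertools import islice
from typing import Iterable, Iterator, List, Sequence


def chunked(rows: Iterable[Sequence], size: int) -> Iterator[List[Sequence]]:
    """Yield successive lists of at most `size` rows."""
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def load_in_chunks(conn, insert_sql: str, rows: Iterable[Sequence],
                   chunk_size: int = 10_000) -> int:
    """Insert rows one chunk per transaction via a DB-API 2.0 connection.

    Short transactions keep locks brief; a failed chunk is rolled back.
    Returns the number of rows loaded.
    """
    total = 0
    for batch in chunked(rows, chunk_size):
        cursor = conn.cursor()
        try:
            cursor.executemany(insert_sql, batch)
            conn.commit()
        except Exception:
            conn.rollback()
            raise
        finally:
            cursor.close()
        total += len(batch)
    return total


# Demo with an in-memory SQLite database standing in for MariaDB.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE person (id INTEGER PRIMARY KEY, name TEXT)")
rows = ((i, f"person-{i}") for i in range(25))
loaded = load_in_chunks(
    conn, "INSERT INTO person (id, name) VALUES (?, ?)", rows, chunk_size=10
)
print(f"loaded {loaded} rows")
```

Because each chunk is independent, a parallel version could hand chunks to several workers, each with its own connection.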

The Results: 76% Deduplication with 85%+ Confidence Threshold

The system processes millions of records per run and is fully automated to execute on a monthly schedule. The pipeline resolved 76% of duplicates using a high-confidence threshold—only matches scoring 85% or more were accepted.

- Deduplication Rate: 76%

- Matches required ≥85% probability

- 400 million records matched in 40 minutes on an 8-node Spark cluster

- Reuse Potential: High. The normalization and matching components can be reused in other projects with minor tweaks.
