In search of the best tool to extract data from PDF?

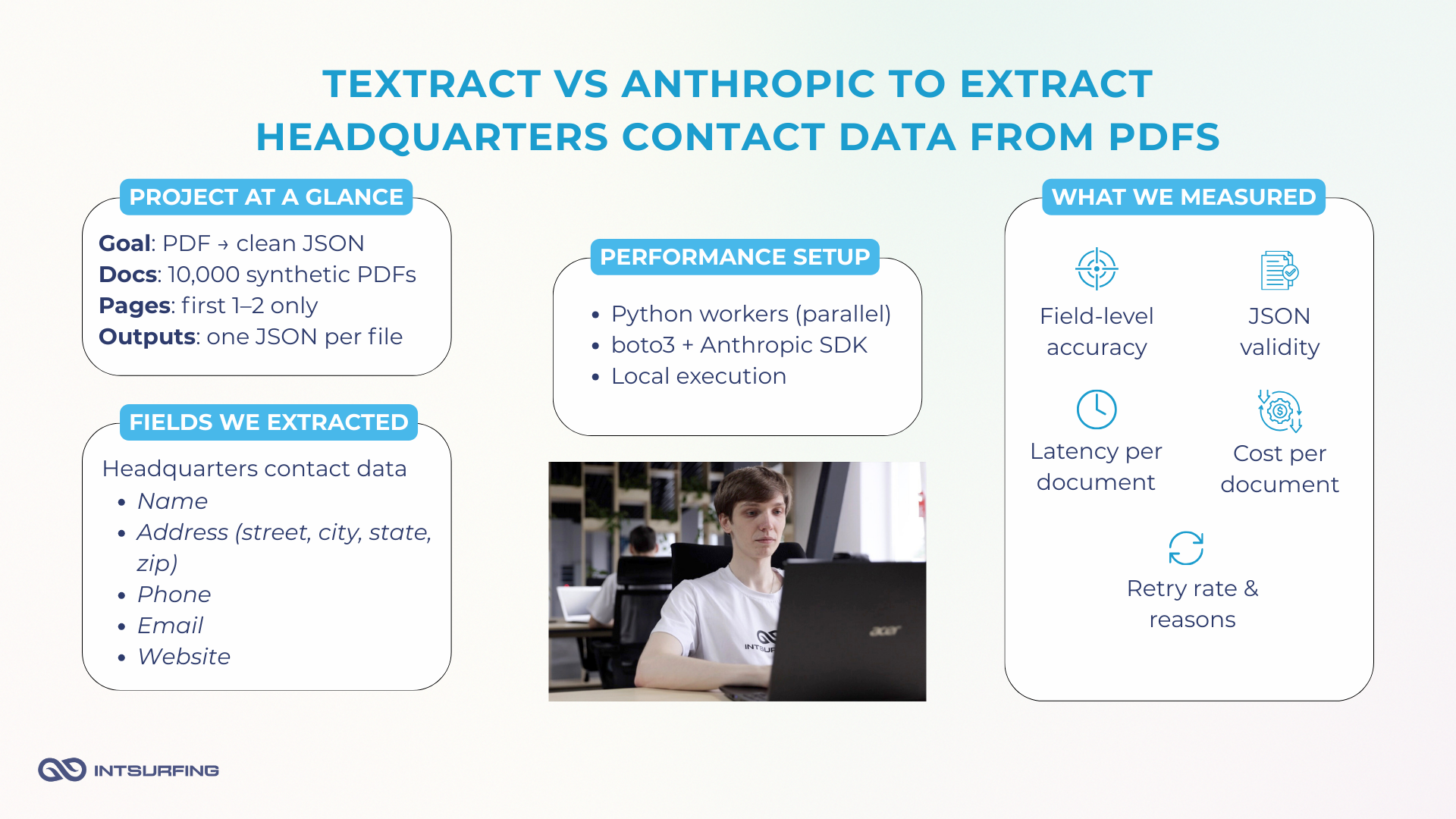

We benchmarked Amazon Textract against Anthropic Claude to extract specific data fields from the first two pages of PDF files.

Below, we show the setup, prompts/code, results, and a quick decision matrix.

How We Compared Textract vs Anthropic for PDF-to-JSON

We built an experiment pipeline to mirror real batch processing: 10,000 synthetically generated PDFs that mimic franchise “Headquarters” blocks with different layouts, address styles, noise levels, and both digital and scanned versions.

The goal was to extract these data fields and return a standardized JSON per document:

- Name

- Address (street, city, state, zip)

- Phone

- Email

- Website

We discovered that HQ contact details are usually located on the first two pages of the file. So, we processed only these pages.

This is an example of the test file from our collection. As you see, it has all the required fields on page one:

- Name: Meeple Harbor Board Game Club

- Address: 2210 1st Ave, Suite 300, Seattle, WA 98121, United States

- Phone: +1 206-555-0176

- Email: contact@meepleharbor.org

- Website: https://www.meepleharbor.org

Everything ran in Python. We used boto3 for Amazon Textract and Anthropic’s official client for Claude. Execution was local to cut out extra S3 hops, and we tested multithreaded runs to see how throughput and cost behave at batch scale.

The flow was identical for every document:

- Load PDF.

- Keep pages 1–2 only.

- Send to Amazon Textract (Queries) with pre-defined field queries.

- Send the same pages to Anthropic Claude via a structured prompt.

- Collect outputs and save as JSON.

- Compare extraction quality, stability, and costs.

During the experiment, we measured:

- Field-level accuracy for name, address, phone, email, and website.

- Latency per document.

- Average cost per document.

- Retry behavior—how often we had to re-run when results were incomplete or the JSON didn’t validate.

Because the inputs, page scope, and schema were held constant, any differences you’ll see in the results come from the tools themselves.
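To make the first metric concrete, field-level accuracy can be computed by comparing each extracted record with its ground truth, one field at a time. The sketch below is only an assumption about how such scoring might look, not our exact evaluation code: the `field_accuracy` helper, the dotted field names, and the case and whitespace normalization are all hypothetical choices.

```python
from typing import Dict, List

FIELDS = ["name", "address.street", "address.city", "address.state",
          "address.zipCode", "phone", "email", "website"]


def _get(record: dict, dotted: str) -> str:
    """Read a possibly nested value such as 'address.city'; missing -> ''."""
    cur = record
    for part in dotted.split("."):
        if not isinstance(cur, dict):
            return ""
        cur = cur.get(part, "")
    return cur if isinstance(cur, str) else ""


def _norm(value: str) -> str:
    """Collapse whitespace and ignore case before comparing."""
    return " ".join(value.split()).lower()


def field_accuracy(predicted: List[dict], expected: List[dict]) -> Dict[str, float]:
    """Share of documents where each field matches the ground truth.

    Note: a field that is missing on both sides counts as a match.
    """
    hits = {f: 0 for f in FIELDS}
    for pred, truth in zip(predicted, expected):
        for f in FIELDS:
            if _norm(_get(pred, f)) == _norm(_get(truth, f)):
                hits[f] += 1
    n = max(len(expected), 1)
    return {f: hits[f] / n for f in FIELDS}
```

Exact matching after normalization is a strict choice; a real evaluation might relax it for phone formats or URL prefixes.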

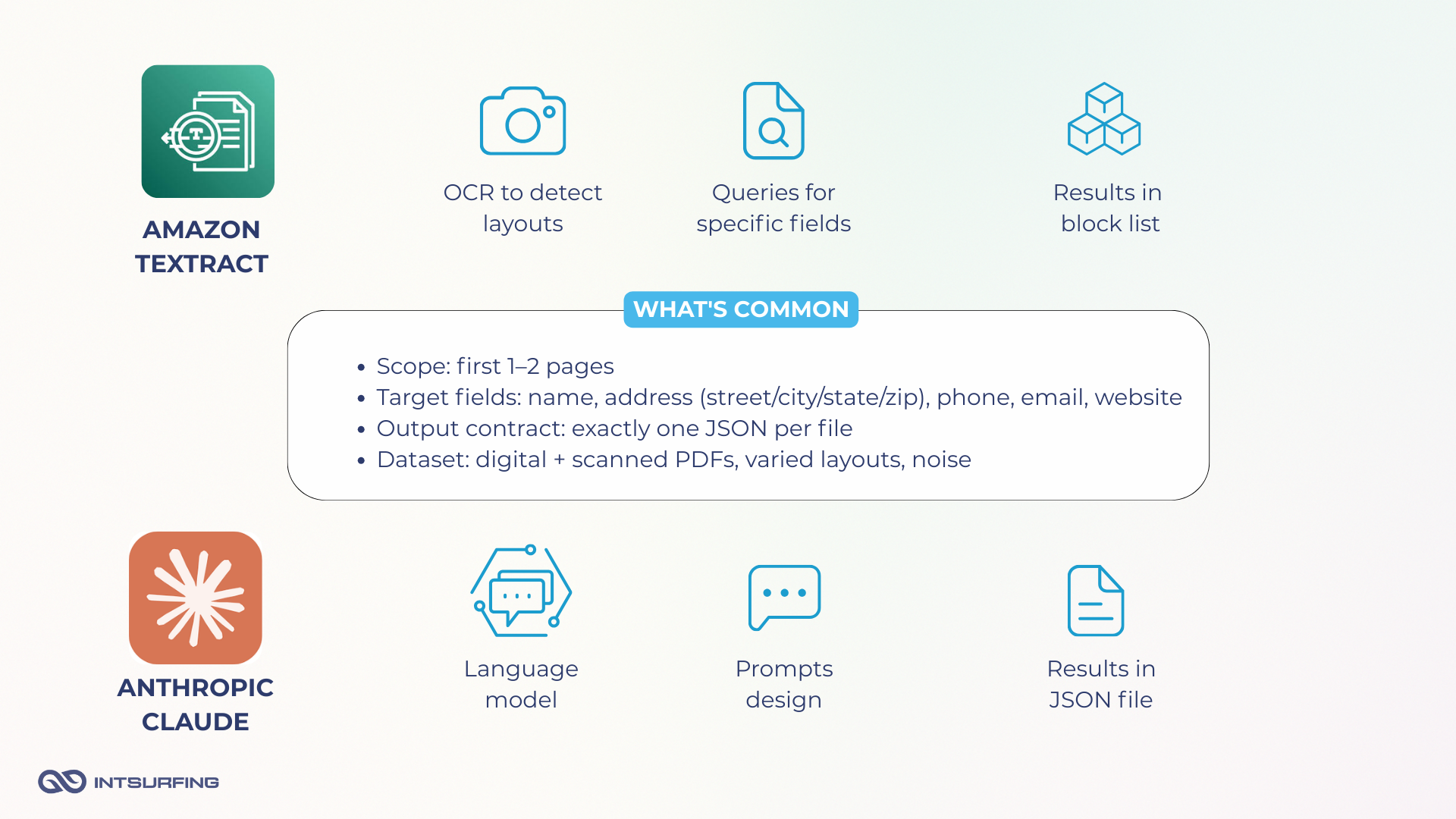

Two Approaches: Amazon Textract (OCR Queries) vs Anthropic Claude (LLM Prompts)

We evaluated two fundamentally different ways to turn PDFs into JSON.

Amazon Textract treats each file like an image. It runs OCR, detects layout, and lets you ask targeted Queries (e.g., “Headquarters email?”). For this, you prepare a set of Queries for the fields you need, then parse the API’s Blocks/Relationships to collect the answers.

Anthropic Claude, by contrast, is a language model. It reads the text from the PDF and, guided by a prompt, “understands” where the desired block is. So, with Claude, the work shifts to prompt design and output control.

Thus, Textract requires more technical customization and subsequent parsing of responses. Anthropic involves more work with prompt design and control of the output.

How We Used Textract to Pull Data from PDFs

To extract structured contact fields from PDFs using Amazon Textract, we went with the analyze_document API in Queries mode. This was a better fit than full-text OCR because we weren’t trying to capture all the content, just pull name, address, phone, email, and website from the first couple of pages.

We ran everything locally in Python, using boto3, the official AWS SDK.

Preparing the Input for Multi-Column PDF Data Extraction with Textract

Before calling the API, we first cut the PDF down to pages 1–2, then converted each page into a single-page PDF held in memory as bytes. That let us skip writing to disk and talk directly to Textract.

We used the fitz library from PyMuPDF to handle page extraction and byte conversion:

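```python
import io
from typing import List

import fitz  # PyMuPDF


def pdf_pages_pdf_bytes(pdf_path: str, pages: List[int]) -> List[bytes]:
    """Return each requested page (1-based) as its own single-page PDF, in memory."""
    out: List[bytes] = []
    with fitz.open(pdf_path) as src:
        for p in pages:
            # Skip page numbers that fall outside the document.
            if p < 1 or p > len(src):
                continue
            dst = fitz.open()  # new, empty PDF
            dst.insert_pdf(src, from_page=p - 1, to_page=p - 1)  # fitz pages are 0-based
            buf = io.BytesIO()
            dst.save(buf)
            dst.close()
            out.append(buf.getvalue())
    return out
```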

Query Design and Field Structuring: Amazon Textract Queries Examples

To extract the fields we needed with Textract, we built a custom set of Queries, each targeting a specific field and mapped to a unique alias. These aliases acted like keys for the final JSON, letting us nest fields under objects (for example, address.city or address.zipCode) without additional mapping later.

Here’s the actual query set we used:

```python
QUERIES = [
    {"Text": "What is the name of the organization?", "Alias": "name"},
    {"Text": "What is the full street address of the organization?", "Alias": "address.street"},
    {"Text": "What is the city of the organization?", "Alias": "address.city"},
    {"Text": "What is the state of the organization?", "Alias": "address.state"},
    {"Text": "What is the ZIP of the organization?", "Alias": "address.zipCode"},
    {"Text": "What is the organization's telephone number?", "Alias": "phone"},
    {"Text": "What is the official email address?", "Alias": "email"},
    {"Text": "What is the official website URL?", "Alias": "website"},
]
```

This gave us predictable, labeled outputs straight from the API. For instance, the value linked to address.city or address.zipCode in Textract’s response could be dropped directly into the corresponding JSON field—no renaming or reshuffling needed.
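To illustrate how dotted aliases can map straight into the nested schema, here is a minimal sketch. The `nest_aliases` helper is hypothetical (it is not part of boto3 or of the code shown in this article) and assumes you already hold a flat dictionary of alias-to-value pairs.

```python
from typing import Any, Dict


def nest_aliases(flat: Dict[str, str]) -> Dict[str, Any]:
    """Turn {'address.city': 'Seattle'} into {'address': {'city': 'Seattle'}}."""
    record: Dict[str, Any] = {}
    for alias, value in flat.items():
        parts = alias.split(".")
        node = record
        # Walk (and create) intermediate objects for every part but the last.
        for key in parts[:-1]:
            node = node.setdefault(key, {})
        node[parts[-1]] = value
    return record


flat_answers = {
    "name": "Meeple Harbor Board Game Club",
    "address.city": "Seattle",
    "address.zipCode": "98121",
}
print(nest_aliases(flat_answers))
# {'name': 'Meeple Harbor Board Game Club', 'address': {'city': 'Seattle', 'zipCode': '98121'}}
```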

But here’s where things got interesting.

We found that the exact wording of each query affected the results. For example, Textract sometimes returned irrelevant website URLs. In some cases, it was a generic footer link (“www.example.com”) instead of the actual site belonging to the organization.

That’s because Textract doesn’t “understand” intent. It just picks the first or most confident match it finds.

So, Textract’s Queries behave more like search instructions than comprehension. You have to guide them and be ready for post-processing or filtering when confidence scores don’t tell the full story.

Output: Textract Address Extraction Results

Textract returns everything in a large response dictionary, where the key data lives inside a list of Blocks. This includes both the raw OCR text and the QUERY_RESULT blocks—the actual answers to our field queries.

We collected all the Blocks into one structure using this snippet:

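```python
# client is a boto3 Textract client; pdf_bytes is one single-page PDF.
response = client.analyze_document(
    Document={"Bytes": pdf_bytes},
    FeatureTypes=["QUERIES"],
    QueriesConfig={"Queries": QUERIES},
)
blocks = response.get("Blocks", [])
# all_blocks accumulates the blocks from every page of the document.
all_blocks.extend(blocks)
```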

To extract the final values, we wrote a small utility called _pick_alias. It searches through the block list for a given alias (e.g., “address.zipCode”), then finds the corresponding QUERY_RESULT block with the highest confidence score:

```python
from typing import Any, Dict, List


def _pick_alias(blocks: List[Dict[str, Any]], alias: str) -> str:
    """Return the highest-confidence answer text for the query with this alias."""
    alias_norm = (alias or "").strip().lower()
    id_index = _index_blocks(blocks)  # helper defined elsewhere; used as an Id -> block lookup
    best_text, best_conf = "", -1.0
    for b in blocks:
        # Only QUERY blocks carry the alias.
        if b.get("BlockType") != "QUERY":
            continue
        q = b.get("Query") or {}
        q_alias = (q.get("Alias") or "").strip().lower()
        if q_alias != alias_norm:
            continue
        # Follow the ANSWER relationship to the QUERY_RESULT blocks.
        for rel in b.get("Relationships", []) or []:
            if (rel.get("Type") or "").upper() in ("ANSWER", "ANSWERS"):
                for ans_id in rel.get("Ids") or []:
                    ans = id_index.get(ans_id)
                    if not ans or ans.get("BlockType") != "QUERY_RESULT":
                        continue
                    t = (ans.get("Text") or "").strip()
                    c = float(ans.get("Confidence") or 0.0)
                    # Keep the non-empty answer with the highest confidence.
                    if t and c > best_conf:
                        best_text, best_conf = t, c
    return best_text
```

This was a reliable way to filter out noise. Even when Textract returned multiple potential answers, we kept only the one with the highest confidence.

Here’s an actual result from one of our clean test files:

- name → Meeple Harbor Board Game Club

- address.street → 2210 1st Ave, Suite 300

- address.city → Seattle

- address.state → WA

- address.zipCode → 98121

- phone → +1 206-555-0176

- email → contact@meepleharbor.org

- website → www.meepleharbor.org

In this case, the output was ready to use. We didn’t need to reformat or correct anything. The aliases mapped directly into our JSON schema, and all fields were accurate.

When Post-Processing Was Needed

On more complex documents (i.e., scanned PDFs or layouts with non-standard formatting), issues started to show up. The most common ones:

- Street addresses sometimes dropped secondary qualifiers: Suite, Floor, or Unit, which made the address incomplete.

- Websites could be pulled from unrelated parts of the document, often footer links, legal disclaimers, or ad blocks, instead of the actual organization’s URL.

In these cases, we had to add extra logic to either correct the result, flag the data as partial, or skip the field if it couldn’t be trusted.
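One possible shape for the "skip the field if it couldn't be trusted" rule is a domain check on the website. The sketch below is an assumed heuristic, not the exact logic we ran: `trusted_website` and `_domain` are hypothetical helpers, and the rule would wrongly drop a valid site whenever the organization's email lives on a different domain.

```python
from typing import Optional
from urllib.parse import urlparse


def _domain(url_or_email: str) -> str:
    """Extract a bare domain from an email address or a URL."""
    value = url_or_email.strip().lower()
    if "@" in value:
        return value.rsplit("@", 1)[1]
    if "://" not in value:
        value = "http://" + value  # urlparse needs a scheme to find the host
    host = urlparse(value).hostname or ""
    return host[4:] if host.startswith("www.") else host


def trusted_website(website: str, email: str) -> Optional[str]:
    """Keep the website only if its domain matches the email's domain."""
    if not website or not email:
        return None
    return website if _domain(website) == _domain(email) else None


print(trusted_website("www.meepleharbor.org", "contact@meepleharbor.org"))  # www.meepleharbor.org
print(trusted_website("www.example.com", "contact@meepleharbor.org"))       # None
```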

So, Textract gave us a deterministic and fast path to structured fields. But as expected, layout complexity and OCR limitations sometimes made accuracy uneven, especially in lower-quality scans or multi-column designs.

Textract Pricing: Predictable, Page-Based Costs

Amazon Textract uses a per-page pricing model. When using the AnalyzeDocument API in Queries mode, the rate is approximately $15 per 1,000 pages, or about $0.015 per page.

Since each document in our test set had two pages, the math worked out like this:

- 1 document (2 pages) → ~$0.03

- 1,000 documents → ~$30

- 10,000 documents → ~$300

Per-page pricing is simple and predictable. There are also volume-based discounts: once you cross 1 million pages, the rate drops to $10 per 1,000 pages, a 33% reduction. A batch of 100,000 two-page documents (200,000 pages) stays below that threshold, so it is billed at the base rate and costs around $3,000.
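The same arithmetic as a small estimator. This is a sketch under one stated assumption: the lower rate applies only to pages above the 1 million threshold, not retroactively to the whole batch. The function name and parameters are ours, and the rates are the approximate ones quoted in this section.

```python
def textract_queries_cost(pages: int,
                          base_rate: float = 15.0,
                          discounted_rate: float = 10.0,
                          threshold: int = 1_000_000) -> float:
    """Estimated USD cost; both rates are per 1,000 pages."""
    first_tier = min(pages, threshold)        # pages billed at the base rate
    second_tier = max(pages - threshold, 0)   # pages billed at the discounted rate
    return (first_tier * base_rate + second_tier * discounted_rate) / 1000


print(textract_queries_cost(2))        # 0.03
print(textract_queries_cost(200_000))  # 3000.0
```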

Textract Retry Behavior and Error Handling

Textract doesn’t offer a conversational retry mechanism. If a result is missing, incomplete, or incorrect, there’s no way to “ask again” within the same run. Instead, you have two options:

- Adjust the query wording and re-submit the document.

- Manually inspect the Blocks to see if the data exists elsewhere in the structure.

This can be inconvenient if you’re dealing with edge cases or ambiguous layouts. But it also means Textract never hallucinates or “fills in the blanks.” If a field isn’t found, it simply won’t return it.

In practice, this made Textract feel more stable but less forgiving. If your Queries are well-tuned and your layouts are consistent, you’ll rarely need retries. But if you’re dealing with varied formats or missing fields, you’ll either have to catch gaps downstream or re-run with a new query set.

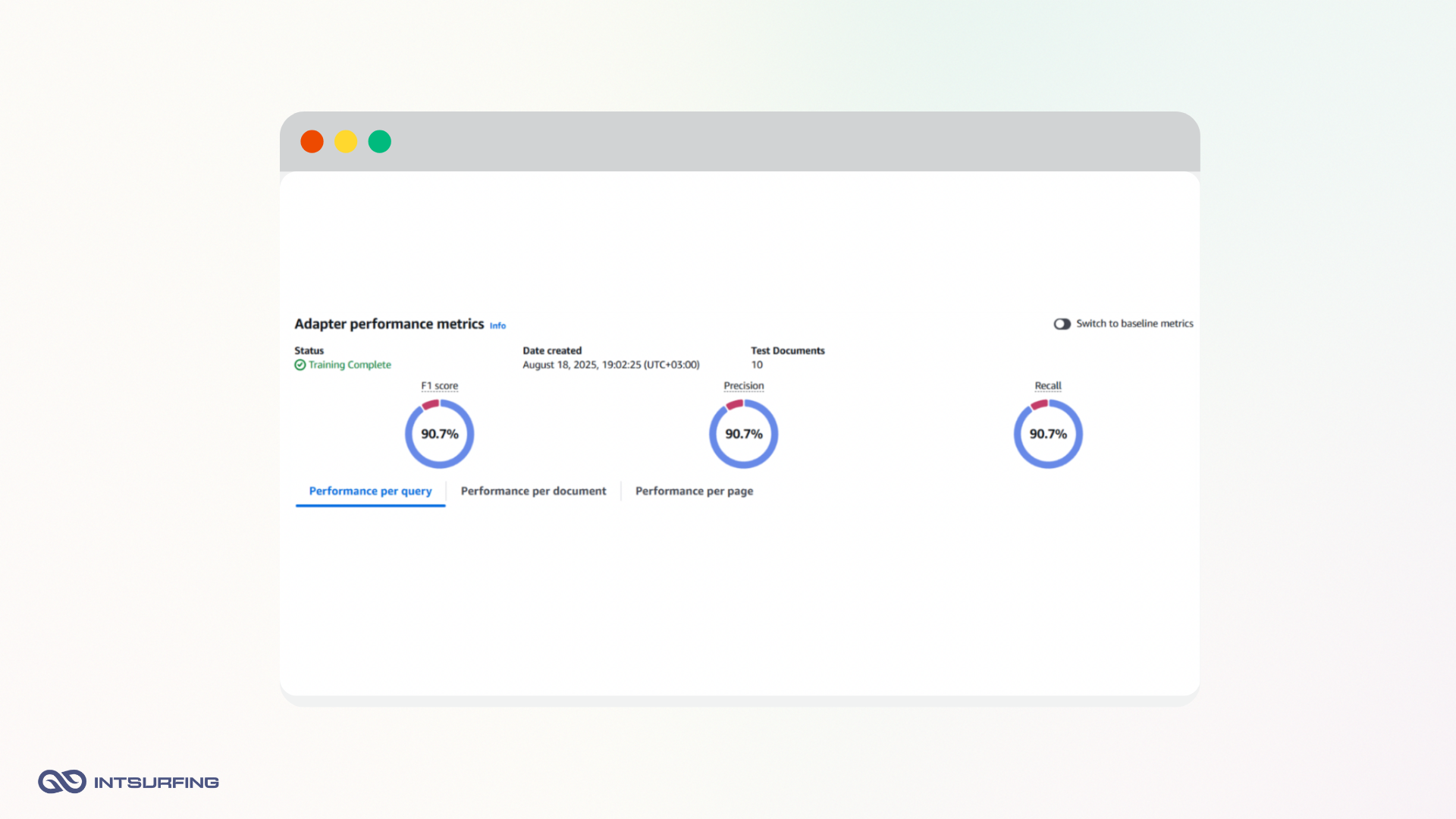

Custom Queries with Textract Adapters

Some fields consistently caused trouble in Textract’s default setup, even with well-written Queries. In particular:

- Street addresses often dropped important qualifiers: Suite, Unit, or Floor

- Websites sometimes pulled unrelated URLs from footers, disclaimers, or ads

Instead of rewriting all the queries, we looked for a smarter fix — Textract Adapters. This is a recently introduced feature that lets you fine-tune extraction for domain-specific cases using a labeled dataset.

How We Used Textract Adapters

We kept our original Queries but added a custom Adapter trained on our most problematic cases. Here’s how it worked:

- Created an Adapter in the AWS Console and received an AdapterId

- Uploaded a Training dataset: a curated set of PDFs where address suffixes were often missed or the wrong website was returned

- Uploaded a Test dataset: documents not used in training, to ensure generalization (not memorization)

- Ran training, which returned F1 Score, Precision, and Recall

Training time varied. Small datasets finished in under an hour, while larger ones took up to 30 hours.

Example post-training performance on our Test dataset:

These results meant the Adapter extracted full, clean street addresses and accurate website URLs.

How to Call Textract with Adapters

Using the Adapter is simple. Just add the AdaptersConfig when calling analyze_document:

```python
response = client.analyze_document(
    Document={"Bytes": pdf_bytes},
    FeatureTypes=["QUERIES"],
    QueriesConfig={"Queries": QUERIES},
    AdaptersConfig={
        "Adapters": [
            {"AdapterId": "your-adapter-id", "Version": "version-number"}
        ]
    },
)
```

One of the key advantages of Adapters is that they’re incrementally trainable. If the first version underperforms (e.g. low recall on certain layouts), you can add more examples to your training/test sets and retrain. Over time, the Adapter becomes better at handling real-world layout variability, especially for difficult fields.

Textract Adapters come with their own pricing tier:

- $25 per 1,000 pages when using an Adapter in inference

- Training is free. You can create datasets and run training jobs without being charged

This does increase your cost compared to standard Textract ($0.015/page), but it’s a targeted way to improve precision, especially useful when you’re extracting high-value or compliance-critical fields.

Where Textract Fits Best

Here’s a breakdown of where Amazon Textract performed well, and where it struggled during our contact-data extraction tests.

| Aspect | Strengths | Weaknesses |
|---|---|---|
| Accuracy | High precision on digital PDFs, especially for name, email, and phone fields | Inconsistent results for full street address and website URLs |
| OCR | Automatically handles scanned PDFs without prep; strong at full-text and table extraction | Sensitive to scan quality; may drop characters or qualifiers |
| Structure | Returns structured QUERY_RESULT blocks with confidence scores | Requires manual linking between Query → Answer → Result to build final JSON |
| Flexibility | Queries can be fully customized | Very sensitive to exact wording of questions |
| Reliability | Fully deterministic; no hallucinations, since it only returns what it sees | No retry or feedback loop; must re-run with adjusted queries |
| Adapters | Can improve weak fields (website/address) using training + retraining | Adds cost ($25/1k pages); training takes 1–30 hours depending on dataset size |

Textract is a strong match when you’re processing large volumes of standardized PDFs with stable layouts. It’s especially effective when your priorities include:

- Consistent results you can trust

- Predictable pricing based on document size

- Automatic OCR for scanned files

- Reliable extraction of key fields like name, email, and phone

- Direct text and table output for broader parsing tasks

If your documents follow common patterns—franchise reports, financial statements, or HR forms—Textract with Queries or Adapters strikes a solid balance between quality, speed, and cost control.

How We Used Anthropic Claude for PDF-to-JSON Extraction

For the Anthropic Claude pipeline, we first had to extract text from the first two pages of each PDF.

Preparing the Input for Anthropic Claude PDF Extraction

We used the PyMuPDF (fitz) library to open each file, pull text page by page, and then wrap the content with explicit page markers. This helped keep the structure clear and guaranteed the model would only analyze the intended pages.

Here’s the function we used:

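```python
import fitz  # PyMuPDF


def parse_pdf(pdf_path, page_range=None):
    """Return the text of the requested pages (1-based), each wrapped in page markers."""
    doc = fitz.open(pdf_path)
    total_pages = doc.page_count
    # Default to the first two pages when no range is given.
    if page_range is None:
        page_range = range(1, min(2, total_pages) + 1)
    all_pages_content = []
    for page_num in sorted(page_range):
        # Skip page numbers that fall outside the document.
        if page_num < 1 or page_num > total_pages:
            continue
        page = doc[page_num - 1]
        text = page.get_text()
        page_content = f"--- Page {page_num} ---\n{text.strip()}\n--- End Page {page_num} ---\n"
        all_pages_content.append(page_content)
    doc.close()
    return "\n".join(all_pages_content)
```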

The output was a single text block, where each page was wrapped with --- Page 1 --- ... --- End Page 1 ---. This structure gave us two benefits:

- It was obvious which content came from which page.

- It allowed us to enforce the “analyze only the first two pages” rule inside the prompt.

Sending Text to Claude

Once the text was ready, we sent it to Anthropic Claude along with a system prompt that defined the extraction rules. For clarity, here’s a minimal version of the code (without retries or validation logic):

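```python
import anthropic

# API_KEY, MODEL_NAME, and get_prompt() are defined elsewhere in the project.
client = anthropic.Anthropic(api_key=API_KEY)
SYSTEM_PROMPT = get_prompt()


def analyze_text_simple(pdf_text: str) -> str:
    resp = client.messages.create(
        model=MODEL_NAME,
        max_tokens=1024,
        system=SYSTEM_PROMPT,
        messages=[{"role": "user", "content": pdf_text}],
    )
    # The first content block holds the model's text reply.
    return resp.content[0].text
```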

This setup kept the workflow simple:

- Extract PDF text with markers →

- Pass it to Claude with a structured system prompt →

- Receive a JSON output describing the contact details.

At this stage, the pipeline didn’t include retries or validation yet. We had a straight text-to-JSON call, enough to prove the concept.

Prompt Design for Stable JSON Output

Unlike Textract, Anthropic Claude doesn’t work with predefined queries. It relies entirely on how you phrase the system prompt. To get good results, we had to be explicit about three things:

- Scope: analyze only the first two pages

- Focus: extract only the headquarters contact block

- Format: return exactly one JSON object in a fixed schema

We also added fallback instructions. If a field was missing, the model should return data_found: false along with a reason and any partial data.

Here’s a shortened example of the system prompt we used:

```text
You are a STRICT headquarters information extractor.

Analyze only page 1 or 2 of the PDF text and return exactly ONE JSON object in this schema:

{
  "data_found": true/false,
  "name": "...",
  "address": {
    "street": "...",
    "city": "...",
    "state": "...",
    "zipCode": "..."
  },
  "phone": "...",
  "email": "...",
  "website": "..."
}

Rules:
- Extract ONLY the official headquarters contact details of the organization.
- Ignore unrelated or "noise" data such as external URLs, disclaimers, footers, or references to regulators (e.g., government websites).
- If multiple candidates are present, choose the most complete and relevant block of HQ details.
- If any required field is missing, return:

{
  "data_found": false,
  "reason": "...",
  "partial_data": {...}
}
```

On top of that, we reinforced a few strict guardrails:

- Only include HQ contact data: name, address, phone, email, website

- Ignore everything else: financial tables, franchisee lists, fee schedules, legal disclaimers, or table of contents

- One answer per document: the output must describe the single most relevant headquarters, never multiple entries

This way, the model consistently produced clean, JSON-only output, ready to drop into the pipeline without extra parsing.

Output: Raw Results from Claude

When we sent the extracted PDF text into Claude with our strict prompt, the model returned structured JSON right away — no need for block parsing or post-processing like with Textract. The output could be stored directly in a database or file.

Here’s an example of a raw first result, straight from the model:

```json
{
  "data_found": true,
  "name": "Meeple Harbor Board Game Club",
  "address": {
    "street": "2210 1st Ave, Suite 300",
    "city": "Seattle",
    "state": "WA",
    "zipCode": "98121"
  },
  "phone": "+1 206-555-0176",
  "email": "contact@meepleharbor.org",
  "website": "https://www.meepleharbor.org"
}
```

While the model usually returned valid JSON, there were cases where the output was either broken or incomplete.

Reliability also varied by field:

- Accurate: name, address, phone — these fields were stable across most documents.

- Less stable: email — sometimes missing entirely.

- Most problematic: website; Claude sometimes “invented” a URL or pulled in irrelevant links from footers or disclaimers.

To solve this, we implemented a two-step retry logic with JSON and business validation. You’ll learn how exactly it worked in the next sections.

Cost: Token-Based Pricing with Claude 3 Haiku

We used Claude 3 Haiku (2024-03-07) — the smallest and most affordable model in the Claude family. Unlike Textract, which charges per page, Claude’s pricing is token-based:

- Input tokens: $0.25 per 1M

- Output tokens: $1.25 per 1M

That means the actual cost depends on how much text your PDFs contain. A sparse, two-page form might use fewer tokens; a dense policy document might use more.

Since our documents were short and we asked for only a small JSON back, the cost per file was extremely low.

On average, a two-page PDF converted to around:

- 4,000 input tokens (the page text)

- 200 output tokens (the JSON object)

Cost calculation per document:

- Input: 4,000 × $0.00000025 = $0.001

- Output: 200 × $0.00000125 = $0.00025

- Total per document (2 pages): ~$0.00125

That’s more than 20× cheaper than Textract’s $0.03 per two-page document.

Here’s what that looks like at different scales:

- 1 document (2 pages): ~$0.00125

- 1,000 documents: ~$1.25

- 10,000 documents: ~$12.50

At larger volumes, the cost advantage compounds. Where 100,000 two-page documents would cost around $3,000 with Textract, the same set would run for only about $125 with Claude.

To keep budgets predictable, Anthropic provides a dedicated /messages/count_tokens endpoint. With it, you can count the tokens in a request before running a batch job.

In practice, this gave us a simple rule of thumb: the JSON itself is cheap, the input text dominates the bill. As long as documents stay in the same size range, you can plan confidently.
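The per-document arithmetic above fits in a few lines. This is a sketch using the Claude 3 Haiku prices quoted in this section as defaults; `claude_cost` is our own helper name, and the token counts are the averages from our runs rather than anything the API guarantees.

```python
def claude_cost(input_tokens: int, output_tokens: int,
                input_price_per_m: float = 0.25,
                output_price_per_m: float = 1.25) -> float:
    """Estimated USD cost of one call; prices are per 1M tokens."""
    total = input_tokens * input_price_per_m + output_tokens * output_price_per_m
    return total / 1_000_000


per_doc = claude_cost(4_000, 200)
print(f"{per_doc:.5f}")            # 0.00125
print(f"{per_doc * 10_000:.2f}")   # 12.50
```

Feeding it real token counts per document, instead of the averages, gives a tighter budget estimate for a specific batch.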

Retry and Self-Analysis with Claude

One of the key differences between Textract and Anthropic is how you handle errors. With Textract, you have to re-run queries from scratch if something goes wrong. Claude, on the other hand, allows for a more interactive correction cycle. You can tell the model what was wrong and give it another chance to fix the output.

We implemented a system of automatic retries with two validation layers:

- JSON structure check. We ran validate_json_structure() on every response. If the model returned broken JSON (e.g. missing commas or quotes), the retry prompt told the model: “Your previous response contained invalid JSON. Please return only valid JSON in the required schema.”

- Business data validation. If the JSON was syntactically valid but the content didn’t pass checks (e.g. email missing @, phone too short, address incomplete), we flagged the issues and sent them back in a retry prompt. This gave the model a second chance to correct its mistakes.

Thanks to this two-level retry system (syntax + data validation), we could force Claude to deliver production-ready results without manual cleanup. It meant that even when the model slipped — either structurally or semantically — the pipeline corrected it automatically.
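The two layers can be sketched roughly as follows. Only the name `validate_json_structure` comes from our pipeline; its signature here, the `validate_business_rules` helper, and the exact thresholds are assumptions made for illustration, based on the checks described above (email contains "@", phone has at least 10 digits, address has state and zipCode).

```python
import json
import re
from typing import Any, Dict, List, Optional, Tuple


def validate_json_structure(raw: str) -> Tuple[Optional[Dict[str, Any]], Optional[str]]:
    """Return (parsed, None) if raw is a JSON object, else (None, error message)."""
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, f"Invalid JSON: {exc.msg}"
    if not isinstance(parsed, dict):
        return None, "Top-level JSON value must be an object."
    return parsed, None


def validate_business_rules(data: Dict[str, Any]) -> List[str]:
    """Return human-readable issues; an empty list means the record passed."""
    issues: List[str] = []
    if "@" not in (data.get("email") or ""):
        issues.append('Provide a valid email format (must include "@").')
    digits = re.sub(r"\D", "", data.get("phone") or "")  # keep digits only
    if len(digits) < 10:
        issues.append("Provide a valid phone number (minimum 10 digits).")
    address = data.get("address")
    if not isinstance(address, dict):
        address = {}
    if not address.get("state") or not address.get("zipCode"):
        issues.append("Address must include state and zipCode.")
    return issues
```

Returning issues as plain sentences means the same strings can be pasted into the retry prompt without any extra mapping.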

The best way to understand how retries worked in practice is to look at a real case.

Claude’s first attempt looked like this:

```json
{
  "data_found": true,
  "name": "ABC Organization",
  "address": {
    "street": "123 Main St",
    "city": "Orlando",
    "state": "",
    "zipCode": ""
  },
  "phone": "555-1234",
  "email": "not-an-email"
}
```

At first glance, this looks fine: the JSON is syntactically valid, and most of the expected fields are there. But when we applied our validation rules, we saw this:

- The email is not valid (missing “@”).

- The phone number is too short to be real.

- The state and zipCode fields are empty.

So, the data was not usable, and we gave Claude our feedback:

Your previous response contained validation errors.

Please correct them and return ONLY valid JSON in the required schema.

Issues to fix:

- Provide a valid email format (must include “@”).

- Provide a valid phone number (minimum 10 digits).

- Address must include state and zipCode.

Here’s what Claude returned after the retry:

```json
{
  "data_found": true,
  "name": "ABC Organization",
  "address": {
    "street": "123 Main St",
    "city": "Orlando",
    "state": "FL",
    "zipCode": "32801"
  },
  "phone": "(407) 555-1234",
  "email": "info@abc.org",
  "website": "www.abc.org"
}
```

This time, everything passed validation:

- The email is in the correct format.

- The phone number has the right length.

- The address now includes both state and zip code.

- A proper website was also added.

How It Worked in Code

To make retries effective, we preserved context from the previous answer. Each time we retried, we added the model’s last response to the conversation history, followed by our feedback prompt:

```python
messages.append({
    "role": "assistant",
    "content": response_text
})
messages.append({
    "role": "user",
    "content": retry_message
})
```

This ensured the next response wasn’t generated from scratch, but rather as a correction of the earlier output. In practice, this approach significantly improved stability and reduced hallucinations.
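Put together, the retry cycle might look like the loop below. It is a sketch, not our production code: `extract_with_retries` is a hypothetical name, and the model call and the business checks are passed in as plain callables (`call_model`, `find_issues`) so the loop can be exercised without an API key. The retry prompts reuse the wording quoted earlier in this section.

```python
import json
from typing import Callable, Dict, List

Message = Dict[str, str]


def extract_with_retries(
    pdf_text: str,
    call_model: Callable[[List[Message]], str],
    find_issues: Callable[[dict], List[str]],
    max_retries: int = 2,
) -> dict:
    """Call the model, validate, and feed errors back until the output passes."""
    messages: List[Message] = [{"role": "user", "content": pdf_text}]
    for _ in range(max_retries + 1):
        response_text = call_model(messages)
        try:
            data = json.loads(response_text)
            issues = find_issues(data)
            if not issues:
                return data
            retry_message = (
                "Your previous response contained validation errors.\n"
                "Please correct them and return ONLY valid JSON in the required schema.\n"
                "Issues to fix:\n" + "\n".join(f"- {issue}" for issue in issues)
            )
        except json.JSONDecodeError:
            retry_message = (
                "Your previous response contained invalid JSON. "
                "Please return only valid JSON in the required schema."
            )
        # Preserve context: the model's last answer, then our feedback.
        messages.append({"role": "assistant", "content": response_text})
        messages.append({"role": "user", "content": retry_message})
    raise ValueError("No valid result after retries")
```

Because the history grows by two messages per attempt, every retry re-sends the document text plus all earlier answers, which is why retries add to the input-token bill.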

Cost Implications

Every retry uses more tokens — both input and output — which directly increases the cost. In our runs:

- If the model produced valid output on the first try, the cost was about $0.00125 per document.

- If it needed 2–3 retries, the price could climb 2–3× higher.

But in return, you get a usable JSON with correct data.

Alternative Input Strategies for Anthropic

In most cases, extracting the first two pages of a PDF as plain text with PyMuPDF (fitz) is enough for Claude to process. But not all PDFs behave the same way. Depending on the source, you may need to adjust your approach.

1. When the PDF is image-based (scanned documents)

Some PDFs don’t actually contain text at all — they’re just scans of paper documents. If you try to parse them with fitz or PyPDF2, you’ll get an empty string because there’s no embedded text layer.

In these cases, the fix is to run OCR (Optical Character Recognition) first. A common setup is to use pdf2image to convert PDF into images, then pytesseract to recognize the text. This restores a usable text layer, which you can then pass into Claude.

We’ve covered a step-by-step example of this approach here: PDF-to-text conversion using pdf2image and pytesseract.
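As a rough sketch of that fallback: check whether the extracted text contains anything besides the page markers, and run OCR only when it does not. The helper names, the 20-character threshold, and the 300 DPI setting are our assumptions; `convert_from_path` and `image_to_string` are the pdf2image and pytesseract calls, which need Poppler and the Tesseract binary installed.

```python
def ocr_pdf_pages(pdf_path: str, first_page: int = 1, last_page: int = 2, dpi: int = 300) -> str:
    """OCR the given page range and wrap each page in the same markers as parse_pdf."""
    # Imported lazily so the rest of the pipeline runs without OCR dependencies.
    from pdf2image import convert_from_path  # requires Poppler
    import pytesseract  # requires the Tesseract binary

    images = convert_from_path(pdf_path, dpi=dpi, first_page=first_page, last_page=last_page)
    pages = []
    for offset, image in enumerate(images):
        page_num = first_page + offset
        text = pytesseract.image_to_string(image)
        pages.append(f"--- Page {page_num} ---\n{text.strip()}\n--- End Page {page_num} ---\n")
    return "\n".join(pages)


def has_text_layer(marked_text: str, min_chars: int = 20) -> bool:
    """Heuristic: is there real content between the page markers?"""
    body = [line for line in marked_text.splitlines() if not line.startswith("--- ")]
    return len("".join(body).strip()) >= min_chars
```

A caller would try the plain-text path first and switch to `ocr_pdf_pages` only when `has_text_layer` returns False, which keeps OCR off the hot path for digital PDFs.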

2. When the PDF contains structured data (tables, schedules, financial reports)

Plain-text parsing also has limits when a PDF contains structured elements: tables, price lists, or schedules. If you flatten them into text, key relationships are lost:

- Tables lose rows and columns and merge into a stream of numbers and labels

- Financial data (e.g. expenses vs. years) loses the pairing

- Price lists or schedules lose their layout, so it’s no longer clear what’s a product vs. what’s a price, or what’s an event vs. what’s a time

When this happens, an LLM may misinterpret the data or generate misleading results.

For these scenarios, it’s better to send the PDF directly to Anthropic as a document object. That way, the model can preserve the structure and return it back in a more usable format — for example, as a Markdown table or structured JSON.

Here’s a minimal example of how that works:

```python
import anthropic

# API_KEY, MODEL_NAME, and get_prompt() are defined elsewhere in the project.
client = anthropic.Anthropic(api_key=API_KEY)
SYSTEM_PROMPT = get_prompt()  # strict prompt with schema


def analyze_structured_document(pdf_b64: str) -> str:
    # Send the PDF itself (base64-encoded) as a document content block.
    content = [
        {
            "type": "document",
            "source": {
                "type": "base64",
                "media_type": "application/pdf",
                "data": pdf_b64,
            },
        }
    ]
    resp = client.messages.create(
        model=MODEL_NAME,
        max_tokens=2000,
        system=SYSTEM_PROMPT,
        messages=[{"role": "user", "content": content}],
    )
    return resp.content[0].text
```
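The function above expects the PDF as a base64 string. A minimal way to produce one is sketched below; `pdf_bytes_to_b64` and `pdf_file_to_b64` are hypothetical helper names, not part of the Anthropic SDK. Note that PDF document input is only available on models that support it, so check the documentation for the model you set as MODEL_NAME.

```python
import base64
from pathlib import Path


def pdf_bytes_to_b64(pdf_bytes: bytes) -> str:
    """Encode raw PDF bytes as the base64 text the document block expects."""
    return base64.standard_b64encode(pdf_bytes).decode("utf-8")


def pdf_file_to_b64(pdf_path: str) -> str:
    """Read a PDF from disk and return it base64-encoded."""
    return pdf_bytes_to_b64(Path(pdf_path).read_bytes())
```

To stay within the "first two pages" scope, the bytes passed in can be a trimmed two-page PDF rather than the full file.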

Where Anthropic Claude Fits Better

In short, Anthropic is a great fit when you need flexibility and low per-document costs, and when JSON-first output is more important than deterministic precision.

Claude 3 Haiku is an excellent choice for:

- Multi-format or inconsistent PDFs where layouts are unpredictable

- Use cases that benefit from semantic understanding instead of rigid queries

- Teams that want immediate JSON output without building complex parsing logic

But there are caveats to keep in mind:

- Expect occasional retries, which can raise costs

- Be ready for instability in email and website fields

- Budgeting at large scale is trickier, since pricing depends on token count rather than page count

| Aspect | Strengths | Weaknesses |
|---|---|---|
| Accuracy | Consistently strong on name, address, phone | Email often missing; website the least reliable (sometimes fabricated) |
| Flexibility | Can adapt to PDFs with very different layouts or structures | Prone to “hallucinating” values when data is unclear |
| Format | Returns JSON directly in the required schema; no block parsing needed | JSON sometimes broken (missing commas/quotes) → requires retry |
| Retry | Can be guided interactively: “fix this field” → corrected output | Every retry uses extra tokens → cost per doc increases |
| Cost | Extremely cheap at scale (~$0.00125 per two-page doc) | If retries are common, price may rise 2–3× |
| Scale | Scales well thanks to low cost and fast response times | Less predictable cost than Textract (depends on token count, not page count) |

Anthropic vs Textract: How the Two Approaches Compare

Let’s put both pipelines side by side.

Textract feels like a traditional enterprise tool: reliable, deterministic, and very good at OCR-heavy workflows. Once you’ve set up the Queries and built your parsing logic, it runs with consistency. But you’ll spend more time configuring and more money per page.

Anthropic is more flexible. You give it text and a schema, and it gives you structured JSON right away. It’s faster to set up and cheaper per document, but you need guardrails. Without validation and retries, you risk broken JSON or fabricated fields.

At scale, the choice depends less on “which is better” and more on what kind of documents you’re dealing with. If your PDFs follow predictable patterns, Textract gives you peace of mind with deterministic results. If your PDFs are messy, inconsistent, or come from many different sources, Claude’s semantic understanding and JSON-first output save time and engineering effort.

| Criteria | Textract | Anthropic |
|---|---|---|
| Setup difficulty | Requires AWS SDK setup, Queries definition, and parsing of complex Block JSON | Easier to start: prepare text + prompt, model returns JSON immediately |
| Output quality | Accurate and deterministic, but often incomplete (especially street address, website) | More flexible JSON, but sometimes hallucinates values or skips fields |
| Post-processing | Mandatory parsing of Blocks & Relationships with custom logic | Minimal: output is already in the right JSON schema |
| Retry ability | No retries; only option is re-running with new Queries | Supports retries: can be told to fix invalid JSON or incorrect fields |
| Cost (per doc) | ~$0.03 per two-page document | ~$0.00125 per two-page document. Very cheap, but retries can raise it 2–3× |
| Best fit use case | Best for large-scale, standardized PDFs, where stability and OCR are key | Best for variable, unstructured PDFs, when fast setup and JSON-first output matter |

Conclusion

For most teams, the real choice comes down to your documents and your priorities. If you value determinism and OCR, Textract is the way to go. If you need flexibility, speed of setup, and scale at low cost, Claude offers a compelling alternative. In practice, many pipelines may even benefit from using both — Textract for scanned inputs and Anthropic for digital text — to balance reliability with agility.